Kaggle House Price ML


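Course Promotion

- I have created a course for job seekers.

- If you enrolled in the course through this blog, please send me the post title and link in an Inflearn message.

I will send you a Starbucks iced Americano as a thank-you gift.

- [Non-majors Welcome] Python Data Analysis That Even Absolute Beginners Can Start Easily - Kaggle Beginner's Journal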

Notice

- I am currently preparing a book for publication.

- Detailed explanations will be summarized and posted after the book is published.

Previous Post

- Kaggle Feature Engineering - House Price

- The previous post covered the code for the Kaggle API and for feature engineering, so please refer to it.

Training and Evaluating Machine Learning Models

Splitting the Dataset and Cross-Validation

X = all_df.iloc[:len(y), :]

X_test = all_df.iloc[len(y):, :]

X.shape, y.shape, X_test.shape

((1458, 258), (1458,), (1459, 258))
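The slicing above depends on how `all_df` was built in the previous post. This is a minimal sketch. It assumes the training features were stacked on top of the test features with `pd.concat`, and the toy frames and values are hypothetical. It shows why `iloc[:len(y)]` recovers the training rows and `iloc[len(y):]` recovers the test rows:

```python
import numpy as np
import pandas as pd

# Hypothetical toy frames standing in for the engineered train/test features
train_features = pd.DataFrame({"GrLivArea": [1500, 2000, 1200], "OverallQual": [6, 8, 5]})
test_features = pd.DataFrame({"GrLivArea": [1800, 900], "OverallQual": [7, 4]})
y = np.log1p(pd.Series([200000, 300000, 150000]))  # log1p-transformed target (assumption)

# Stack train on top of test so feature engineering is applied to both at once
all_df = pd.concat([train_features, test_features]).reset_index(drop=True)

# The first len(y) rows are training rows; everything after them is the test set
X = all_df.iloc[:len(y), :]
X_test = all_df.iloc[len(y):, :]

print(X.shape, y.shape, X_test.shape)  # (3, 2) (3,) (2, 2)
```

This positional split can silently mix the two sets in two cases: if the frames were concatenated in the other order, or if rows were reordered during feature engineering.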

from sklearn.model_selection import train_test_split

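# Name the hold-out part X_valid / y_valid so that X_test (the Kaggle test features) is not overwritten
X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size = 0.25, random_state = 0)

X_train.shape, X_valid.shape, y_train.shape, y_valid.shape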

((1093, 258), (365, 258), (1093,), (365,))
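This hold-out split is not used again in this post, because the models are evaluated with K-fold cross-validation instead. The sketch below shows how a hold-out split could be used. It fits on the training part and scores RMSE on the held-out part. It runs on synthetic data from `make_regression`, an assumption standing in for the House Price features. The hold-out arrays are named `X_valid`/`y_valid`, a naming choice that keeps them separate from the Kaggle test features in `X_test`.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the House Price features (assumption: any numeric X, y works)
X, y = make_regression(n_samples=400, n_features=10, noise=10.0, random_state=0)

# Keep 25% aside as a hold-out set, with names that do not clash with X_test
X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size=0.25, random_state=0)

model = LinearRegression().fit(X_train, y_train)  # fit on the training part only
valid_rmse = np.sqrt(mean_squared_error(y_valid, model.predict(X_valid)))
print(X_train.shape, X_valid.shape, round(valid_rmse, 3))
```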

Evaluation Metrics

MAE

import numpy as np

def mean_absolute_error(y_true, y_pred):
    # Sum the absolute differences, then divide by the number of samples
    error = 0
    for yt, yp in zip(y_true, y_pred):
        error = error + np.abs(yt - yp)
    mae = error / len(y_true)
    return mae

MSE

import numpy as np

def mean_squared_error(y_true, y_pred):
    # Sum the squared differences, then divide by the number of samples
    error = 0
    for yt, yp in zip(y_true, y_pred):
        error = error + (yt - yp) ** 2
    mse = error / len(y_true)
    return mse

RMSE

import numpy as np

def root_rmse_squared_error(y_true, y_pred):
    # Same as MSE followed by a square root; the result is rounded to 3 decimals
    error = 0
    for yt, yp in zip(y_true, y_pred):
        error = error + (yt - yp) ** 2
    mse = error / len(y_true)
    rmse = np.round(np.sqrt(mse), 3)
    return rmse
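The loop-based functions above spell out the definitions. Below is a vectorized NumPy sketch of the same three metrics, with a cross-check against `sklearn.metrics`. The names `mae`, `mse`, and `rmse` are hypothetical and were chosen so they do not clash with the functions above. Unlike `root_rmse_squared_error`, this version does not round the RMSE.

```python
import numpy as np
from sklearn import metrics

def mae(y_true, y_pred):
    # Mean of absolute errors, computed over whole arrays at once
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return np.mean(np.abs(y_true - y_pred))

def mse(y_true, y_pred):
    # Mean of squared errors
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return np.mean((y_true - y_pred) ** 2)

def rmse(y_true, y_pred):
    # Square root of MSE, without rounding
    return np.sqrt(mse(y_true, y_pred))

y_true = [400, 300, 800]
y_pred = [380, 320, 777]
print(mae(y_true, y_pred), mse(y_true, y_pred), round(rmse(y_true, y_pred), 3))  # 21.0 443.0 21.048

# Cross-check against scikit-learn's implementations
print(np.isclose(mae(y_true, y_pred), metrics.mean_absolute_error(y_true, y_pred)))  # True
print(np.isclose(mse(y_true, y_pred), metrics.mean_squared_error(y_true, y_pred)))   # True
```

Working on whole arrays avoids the Python-level loop. This matters once the inputs grow to thousands of predictions, as with the 1,458 training rows here.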

Test1

y_true = [400, 300, 800]

y_pred = [380, 320, 777]

print("MAE:", mean_absolute_error(y_true, y_pred))

print("MSE:", mean_squared_error(y_true, y_pred))

print("RMSE:", root_rmse_squared_error(y_true, y_pred))

MAE: 21.0

MSE: 443.0

RMSE: 21.048

Test2

y_true = [400, 300, 800, 900]

y_pred = [380, 320, 777, 600]

print("MAE:", mean_absolute_error(y_true, y_pred))

print("MSE:", mean_squared_error(y_true, y_pred))

print("RMSE:", root_rmse_squared_error(y_true, y_pred))

MAE: 90.75

MSE: 22832.25

RMSE: 151.103
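Test2 adds a single sample whose error is 300. The sketch below reuses the absolute errors from the two tests (20, 20, 23, and then 300) to measure how differently the metrics react. Because MSE squares each error, one large miss dominates it, while MAE grows much less.

```python
import numpy as np

errors_test1 = np.array([20, 20, 23], dtype=float)       # |y_true - y_pred| in Test1
errors_test2 = np.array([20, 20, 23, 300], dtype=float)  # Test2 adds one large miss

mae1, mae2 = errors_test1.mean(), errors_test2.mean()
mse1, mse2 = (errors_test1 ** 2).mean(), (errors_test2 ** 2).mean()

# Growth factor of each metric when the single large error is added
print(round(mae2 / mae1, 2))                      # 4.32  (MAE: 21.0 -> 90.75)
print(round(mse2 / mse1, 2))                      # 51.54 (MSE: 443.0 -> 22832.25)
print(round(float(np.sqrt(mse2 / mse1)), 2))      # 7.18  (RMSE)
```

RMSE sits between the two because the square root partly undoes the squaring. It still penalizes the single large miss more heavily than MAE does.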

RMSE with Sklearn

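from sklearn.metrics import mean_squared_error

def rmsle(y_true, y_pred):
    # y was log1p-transformed in the previous post (hence np.expm1 at submission time),
    # so a plain RMSE on this scale is the RMSLE of the original prices
    return np.sqrt(mean_squared_error(y_true, y_pred))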
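The helper above is named `rmsle` even though it computes a plain RMSE. Assume the target `y` was transformed with `np.log1p` in the previous post, which matches the `np.expm1` used later when building the submission. Then RMSE on the log-scale target equals RMSLE on the original prices. The check below uses hypothetical prices and scikit-learn's `mean_squared_log_error` to confirm this identity.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, mean_squared_log_error

# Hypothetical sale prices on the original (dollar) scale
price_true = np.array([200000.0, 150000.0, 320000.0])
price_pred = np.array([210000.0, 140000.0, 300000.0])

# RMSE computed on log1p-transformed values, as when the target itself was log1p-transformed
rmse_on_log = np.sqrt(mean_squared_error(np.log1p(price_true), np.log1p(price_pred)))

# RMSLE computed directly on the original scale
rmsle_direct = np.sqrt(mean_squared_log_error(price_true, price_pred))

print(np.isclose(rmse_on_log, rmsle_direct))  # True
```

The House Prices competition scores submissions on the RMSE between the logarithms of predicted and observed prices. That should make these log-scale numbers roughly comparable to the leaderboard score.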

Defining and Validating Models

from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, cross_val_score
from sklearn.linear_model import LinearRegression

def cv_rmse(model, n_folds=5):
    # Shuffled K-fold split with a fixed seed so the folds are reproducible
    cv = KFold(n_splits=n_folds, random_state=42, shuffle=True)
    # Uses the global X and y; the scorer returns negative MSE per fold, so negate and take the root
    rmse_list = np.sqrt(-cross_val_score(model, X, y, scoring='neg_mean_squared_error', cv=cv))
    print('CV RMSE value list:', np.round(rmse_list, 4))
    print('CV RMSE mean value:', np.round(np.mean(rmse_list), 4))
    return rmse_list

n_folds = 5

rmse_scores = {}

lr_model = LinearRegression()

score = cv_rmse(lr_model, n_folds)

print("linear regression - mean: {:.4f} (std: {:.4f})".format(score.mean(), score.std()))

rmse_scores['linear regression'] = (score.mean(), score.std())

CV RMSE value list: [0.139 0.1749 0.1489 0.1102 0.1064]

CV RMSE mean value: 0.1359

linear regression - mean: 0.1359 (std: 0.0254)
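`cv_rmse` relies on two behaviors of `cross_val_score`. First, with `scoring='neg_mean_squared_error'` it returns negated MSE values, because scikit-learn scorers follow a higher-is-better convention. Second, it fits a fresh copy of the model on each fold. The sketch below runs on synthetic `make_regression` data, an assumption in place of the House Price features, and reproduces the same per-fold RMSE with an explicit `KFold` loop.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, cross_val_score

# Synthetic data standing in for the House Price X, y
X, y = make_regression(n_samples=200, n_features=5, noise=5.0, random_state=0)
cv = KFold(n_splits=5, shuffle=True, random_state=42)
model = LinearRegression()

# cross_val_score reports negative MSE (higher is better), so flip the sign before the root
sk_rmse = np.sqrt(-cross_val_score(model, X, y, scoring="neg_mean_squared_error", cv=cv))

# The same computation written out fold by fold
manual_rmse = []
for train_idx, valid_idx in cv.split(X):
    fold_model = clone(model).fit(X[train_idx], y[train_idx])  # fresh, unfitted copy per fold
    preds = fold_model.predict(X[valid_idx])
    manual_rmse.append(np.sqrt(mean_squared_error(y[valid_idx], preds)))

print(np.allclose(sk_rmse, manual_rmse))  # True
```

Because `random_state` is a fixed integer, `KFold` yields the same folds on every call to `split`. That is why the two loops see identical train/validation rows.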

Submitting the First Final Predictions

from sklearn.model_selection import cross_val_predict

X = all_df.iloc[:len(y), :]

X_test = all_df.iloc[len(y):, :]

X.shape, y.shape, X_test.shape

lr_model_fit = lr_model.fit(X, y)

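# The model predicts log1p(SalePrice); np.expm1 converts back to prices and np.floor drops the fractional part
final_preds = np.floor(np.expm1(lr_model_fit.predict(X_test)))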

print(final_preds)

[117164. 158072. 187662. ... 173438. 115451. 219376.]

import pandas as pd

submission = pd.read_csv("sample_submission.csv")
submission.iloc[:, 1] = final_preds  # column 1 is SalePrice
print(submission.head())
submission.to_csv("The_first_regression.csv", index=False)

     Id  SalePrice
0  1461   117164.0
1  1462   158072.0
2  1463   187662.0
3  1464   197265.0
4  1465   199692.0
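The submission step undoes the log transform with `np.expm1` and then truncates with `np.floor`. The self-contained sketch below uses hypothetical Ids and predictions. It builds the same two-column file directly with `pd.DataFrame` instead of editing `sample_submission.csv`, and it writes to an in-memory buffer in place of a real CSV file.

```python
import io

import numpy as np
import pandas as pd

# Hypothetical stand-ins: test-set Ids and model predictions on the log1p scale
test_ids = pd.Series([1461, 1462, 1463], name="Id")
log_preds = np.log1p(np.array([117164.4, 158072.9, 187662.2]))

# Undo the log1p transform with expm1, then drop the fractional part as the post does
sale_price = np.floor(np.expm1(log_preds))

submission = pd.DataFrame({"Id": test_ids, "SalePrice": sale_price})

buffer = io.StringIO()  # in-memory file instead of e.g. "The_first_regression.csv"
submission.to_csv(buffer, index=False)
print(buffer.getvalue())
```

When you build the frame by hand like this, the `Id` values must be in the same order as the rows of `X_test`. Reading `sample_submission.csv`, as the post does, keeps the Kaggle order, provided `X_test` is in that order too.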

Uploading to Kaggle

- First, click Submission on the competition page, then upload the file.

- After submitting, check the final score and the leaderboard ranking.

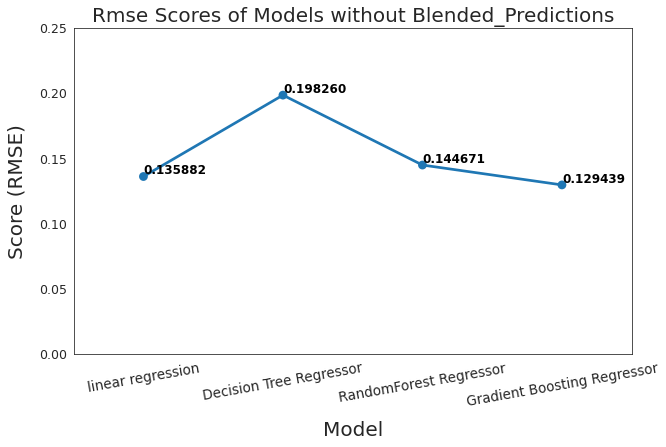

Adding More Model Algorithms

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor

from sklearn.tree import DecisionTreeRegressor

from sklearn.linear_model import LinearRegression

# Linear Regression

lr_model = LinearRegression()

# Decision Tree

tree_model = DecisionTreeRegressor()

# Random Forest Regressor

rf_model = RandomForestRegressor()

# Gradient Boosting Regressor

gbr_model = GradientBoostingRegressor()
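In the post, each of these four models is evaluated with its own, nearly identical block of code. A loop over a dictionary of models is one way to avoid that repetition. This sketch runs on synthetic data. For speed and repeatability it also sets `random_state` and a smaller `n_estimators=50` for the random forest, both additions not in the original. It calls `cross_val_score` directly rather than `cv_rmse`, and it fills `rmse_scores` in the same `(mean, std)` format the post uses for its chart.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in for X, y
X, y = make_regression(n_samples=200, n_features=8, noise=10.0, random_state=0)
cv = KFold(n_splits=5, shuffle=True, random_state=42)

models = {
    "linear regression": LinearRegression(),
    "Decision Tree Regressor": DecisionTreeRegressor(random_state=0),
    "RandomForest Regressor": RandomForestRegressor(n_estimators=50, random_state=0),
    "Gradient Boosting Regressor": GradientBoostingRegressor(random_state=0),
}

rmse_scores = {}
for name, model in models.items():
    # Per-fold RMSE from negated MSE, exactly as in cv_rmse
    scores = np.sqrt(-cross_val_score(model, X, y, scoring="neg_mean_squared_error", cv=cv))
    rmse_scores[name] = (scores.mean(), scores.std())
    print("{} - mean: {:.4f} (std: {:.4f})".format(name, scores.mean(), scores.std()))
```

Adding another model then means adding one dictionary entry rather than another copy of the evaluation block.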

score = cv_rmse(lr_model, n_folds)

print("linear regression - mean: {:.4f} (std: {:.4f})".format(score.mean(), score.std()))

rmse_scores['linear regression'] = (score.mean(), score.std())

CV RMSE value list: [0.139 0.1749 0.1489 0.1102 0.1064]

CV RMSE mean value: 0.1359

linear regression - mean: 0.1359 (std: 0.0254)

score = cv_rmse(tree_model, n_folds)

print("Decision Tree Regressor - mean: {:.4f} (std: {:.4f})".format(score.mean(), score.std()))

rmse_scores['Decision Tree Regressor'] = (score.mean(), score.std())

CV RMSE value list: [0.2005 0.2096 0.2233 0.182 0.176 ]

CV RMSE mean value: 0.1983

Decision Tree Regressor - mean: 0.1983 (std: 0.0174)

score = cv_rmse(rf_model, n_folds)

print("RandomForest Regressor - mean: {:.4f} (std: {:.4f})".format(score.mean(), score.std()))

rmse_scores['RandomForest Regressor'] = (score.mean(), score.std())

CV RMSE value list: [0.1489 0.1574 0.1448 0.142 0.1304]

CV RMSE mean value: 0.1447

RandomForest Regressor - mean: 0.1447 (std: 0.0088)

score = cv_rmse(gbr_model, n_folds)

print("Gradient Boosting Regressor - mean: {:.4f} (std: {:.4f})".format(score.mean(), score.std()))

rmse_scores['Gradient Boosting Regressor'] = (score.mean(), score.std())

CV RMSE value list: [0.1378 0.1368 0.1324 0.1226 0.1176]

CV RMSE mean value: 0.1294

Gradient Boosting Regressor - mean: 0.1294 (std: 0.0080)

import matplotlib.pyplot as plt
import seaborn as sns

fig, ax = plt.subplots(figsize=(10, 6))
ax = sns.pointplot(x=list(rmse_scores.keys()), y=[score for score, _ in rmse_scores.values()], markers=['o'], linestyles=['-'], ax=ax)
# Write each model's mean RMSE just above its point
for i, score in enumerate(rmse_scores.values()):
    ax.text(i, score[0] + 0.002, '{:.6f}'.format(score[0]), horizontalalignment='left', size='large', color='black', weight='semibold')
ax.set_ylabel('Score (RMSE)', size=20, labelpad=12.5)
ax.set_xlabel('Model', size=20, labelpad=12.5)
ax.tick_params(axis='x', labelsize=13.5, rotation=10)
ax.tick_params(axis='y', labelsize=12.5)
ax.set_ylim(0, 0.25)
ax.set_title('Rmse Scores of Models without Blended_Predictions', size=20)
plt.show()

lr_model_fit = lr_model.fit(X, y)

tree_model_fit = tree_model.fit(X, y)

rf_model_fit = rf_model.fit(X, y)

gbr_model_fit = gbr_model.fit(X, y)

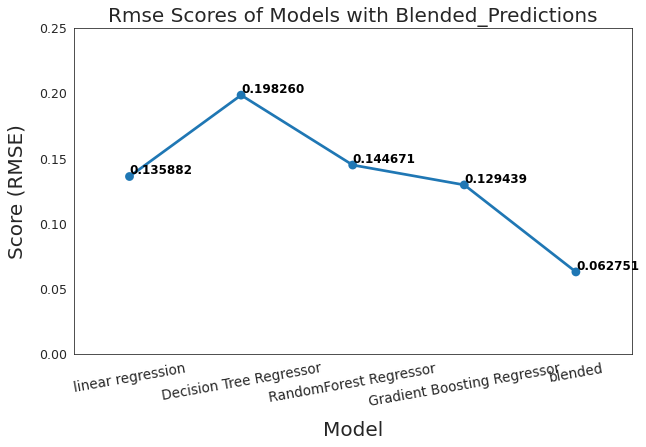

def blended_learning_predictions(X):
    # Fixed, hand-picked weights that sum to 1.0
    blended_score = (0.3 * lr_model_fit.predict(X)) + \
                    (0.1 * tree_model_fit.predict(X)) + \
                    (0.3 * gbr_model_fit.predict(X)) + \
                    (0.3 * rf_model_fit.predict(X))
    return blended_score
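The blend is a fixed weighted average of the four models' predictions, with hand-picked weights of 0.3/0.1/0.3/0.3 that sum to 1. The small generalized sketch below makes the weights an argument and checks that they sum to 1. The helper name `weighted_blend` and the toy predictions are hypothetical.

```python
import numpy as np

def weighted_blend(predictions, weights):
    """Combine per-model prediction arrays with fixed weights (hypothetical helper)."""
    weights = np.asarray(weights, dtype=float)
    # Weights summing to 1 keep the blend on the same scale as the individual predictions
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights should sum to 1")
    stacked = np.vstack(predictions)  # shape: (n_models, n_samples)
    return weights @ stacked          # weighted sum over models for each sample

# Toy log1p-scale predictions from four models, in the post's order: lr, tree, gbr, rf
lr_pred = np.array([12.0, 11.5])
tree_pred = np.array([12.4, 11.1])
gbr_pred = np.array([12.1, 11.6])
rf_pred = np.array([11.9, 11.4])

blend = weighted_blend([lr_pred, tree_pred, gbr_pred, rf_pred], [0.3, 0.1, 0.3, 0.3])
print(np.round(blend, 3))  # [12.04 11.46]
```

Because the weights sum to 1, the blend stays on the same log-price scale as each model's output. `np.expm1` can therefore still be applied to it afterwards.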

blended_score = rmsle(y, blended_learning_predictions(X))

rmse_scores['blended'] = (blended_score, 0)

print('RMSLE score on train data:')

print(blended_score)

RMSLE score on train data:

0.06275101079147864
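The score above is computed on the same rows the four models were fitted on. It is therefore optimistic and not directly comparable with the cross-validated RMSE of the individual models. A fully grown decision tree, for instance, can fit its training rows almost perfectly. The sketch below evaluates the blend out of fold with scikit-learn's `cross_val_predict`, so every row's prediction comes from models that did not see that row. The data are synthetic, and the `random_state` and `n_estimators` settings are assumptions added for speed and repeatability.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold, cross_val_predict
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in for X, y
X, y = make_regression(n_samples=200, n_features=8, noise=10.0, random_state=0)
cv = KFold(n_splits=5, shuffle=True, random_state=42)

# Same order and weights as blended_learning_predictions: lr, tree, gbr, rf
models_and_weights = [
    (LinearRegression(), 0.3),
    (DecisionTreeRegressor(random_state=0), 0.1),
    (GradientBoostingRegressor(random_state=0), 0.3),
    (RandomForestRegressor(n_estimators=50, random_state=0), 0.3),
]

# Out-of-fold blend: each row is predicted by models trained without that row
oof_blend = np.zeros(len(y))
for model, weight in models_and_weights:
    oof_blend += weight * cross_val_predict(model, X, y, cv=cv)
oof_rmse = np.sqrt(mean_squared_error(y, oof_blend))

# For contrast: the same blend scored on the data it was trained on
train_blend = sum(w * m.fit(X, y).predict(X) for m, w in models_and_weights)
train_rmse = np.sqrt(mean_squared_error(y, train_blend))

print(round(oof_rmse, 3), round(train_rmse, 3))  # the training-data score is the smaller one
```

An out-of-fold score like `oof_rmse` is the one that can sit on the same chart as the per-model cross-validation means.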

fig, ax = plt.subplots(figsize=(10, 6))
ax = sns.pointplot(x=list(rmse_scores.keys()), y=[score for score, _ in rmse_scores.values()], markers=['o'], linestyles=['-'], ax=ax)
# Write each score just above its point
for i, score in enumerate(rmse_scores.values()):
    ax.text(i, score[0] + 0.002, '{:.6f}'.format(score[0]), horizontalalignment='left', size='large', color='black', weight='semibold')
ax.set_ylabel('Score (RMSE)', size=20, labelpad=12.5)
ax.set_xlabel('Model', size=20, labelpad=12.5)
ax.tick_params(axis='x', labelsize=13.5, rotation=10)
ax.tick_params(axis='y', labelsize=12.5)
ax.set_ylim(0, 0.25)
ax.set_title('Rmse Scores of Models with Blended_Predictions', size=20)
plt.show()

submission.iloc[:, 1] = np.floor(np.expm1(blended_learning_predictions(X_test)))

submission.to_csv("The_second_regression.csv", index=False)

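- Check the improved result on the leaderboard. Keep in mind that the blended value in the second chart (0.062751) is measured on the training data the models were fitted on, while the other points are 5-fold cross-validation means. The chart therefore likely overstates the gain, and the leaderboard score is the fairer comparison.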