S3 with Python Basic Tutorial

Creating a Bucket

- Create a bucket.

import boto3

print(boto3.__version__)

1.23.5

bucket = boto3.resource('s3')

response = bucket.create_bucket(
    Bucket="your_bucket_name",
    ACL="private",  # public-read
    CreateBucketConfiguration={
        'LocationConstraint': 'ap-northeast-2'
    }
)

print(response)

s3.Bucket(name='your_bucket_name')

- Check the S3 bucket dashboard to confirm that the bucket was actually created.
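Two details matter when adapting the call above. S3 rejects a `LocationConstraint` of `us-east-1`, so `CreateBucketConfiguration` has to be left out for that region. Bucket names are also restricted (3 to 63 characters; lowercase letters, digits, hyphens, and dots), so the placeholder `your_bucket_name` must be replaced with a valid name. The following is a minimal sketch: `is_plausible_bucket_name` and `build_create_bucket_kwargs` are hypothetical helper names, and the check covers only these basic rules, not every S3 naming rule.

```python
import re

# Basic S3 naming rules only: 3-63 characters, lowercase letters, digits,
# hyphens and dots, starting and ending with a letter or digit.
_BUCKET_NAME_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def is_plausible_bucket_name(name):
    """Return True if `name` passes the basic naming checks above."""
    return bool(_BUCKET_NAME_RE.match(name))


def build_create_bucket_kwargs(name, region, acl="private"):
    """Build keyword arguments for create_bucket (hypothetical helper)."""
    if not is_plausible_bucket_name(name):
        raise ValueError("invalid bucket name: {!r}".format(name))
    kwargs = {"Bucket": name, "ACL": acl}
    # us-east-1 is the default location and must not be passed as a constraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


kwargs = build_create_bucket_kwargs("my-tutorial-bucket", "ap-northeast-2")
print(kwargs)
# With credentials configured, the call would then be:
#   boto3.resource("s3", region_name="ap-northeast-2").create_bucket(**kwargs)
```

The name `my-tutorial-bucket` is only an example; bucket names must be globally unique, so a real run needs a name of your own.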

Client Bucket

- This time, create the bucket through the client interface.

client = boto3.client('s3')

response = client.create_bucket(
    Bucket="your_bucket_name",
    ACL="private",
    CreateBucketConfiguration={
        'LocationConstraint': 'ap-northeast-2'
    }
)

print(response)

{'ResponseMetadata': {'RequestId': '1X0BAXRG653Q7Y61', 'HostId': 'WwKyxNBcd1V9x6D/WZn8twMKSWKBnkwVCPWtvarZvyNSSvqr7Q77J6OFAdWuYAwiv/nQfXoW/0U=', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amz-id-2': 'WwKyxNBcd1V9x6D/WZn8twMKSWKBnkwVCPWtvarZvyNSSvqr7Q77J6OFAdWuYAwiv/nQfXoW/0U=', 'x-amz-request-id': '1X0BAXRG653Q7Y61', 'date': 'Wed, 25 May 2022 03:16:52 GMT', 'location': 'http://your_bucket_name.s3.amazonaws.com/', 'server': 'AmazonS3', 'content-length': '0'}, 'RetryAttempts': 0}, 'Location': 'http://your_bucket_name.s3.amazonaws.com/'}

Differences Between Resource and Client

- Resource
  - High-level, object-oriented interface
  - Generated from a resource description
  - Uses identifiers and attributes
  - Centered on operations on the resource itself
- Client
  - Low-level interface
  - Generated from a service description
  - Exposes the botocore-level client (botocore is the library underlying both the AWS CLI and boto3)
  - Maps 1:1 to the AWS API
  - Methods are defined in snake case

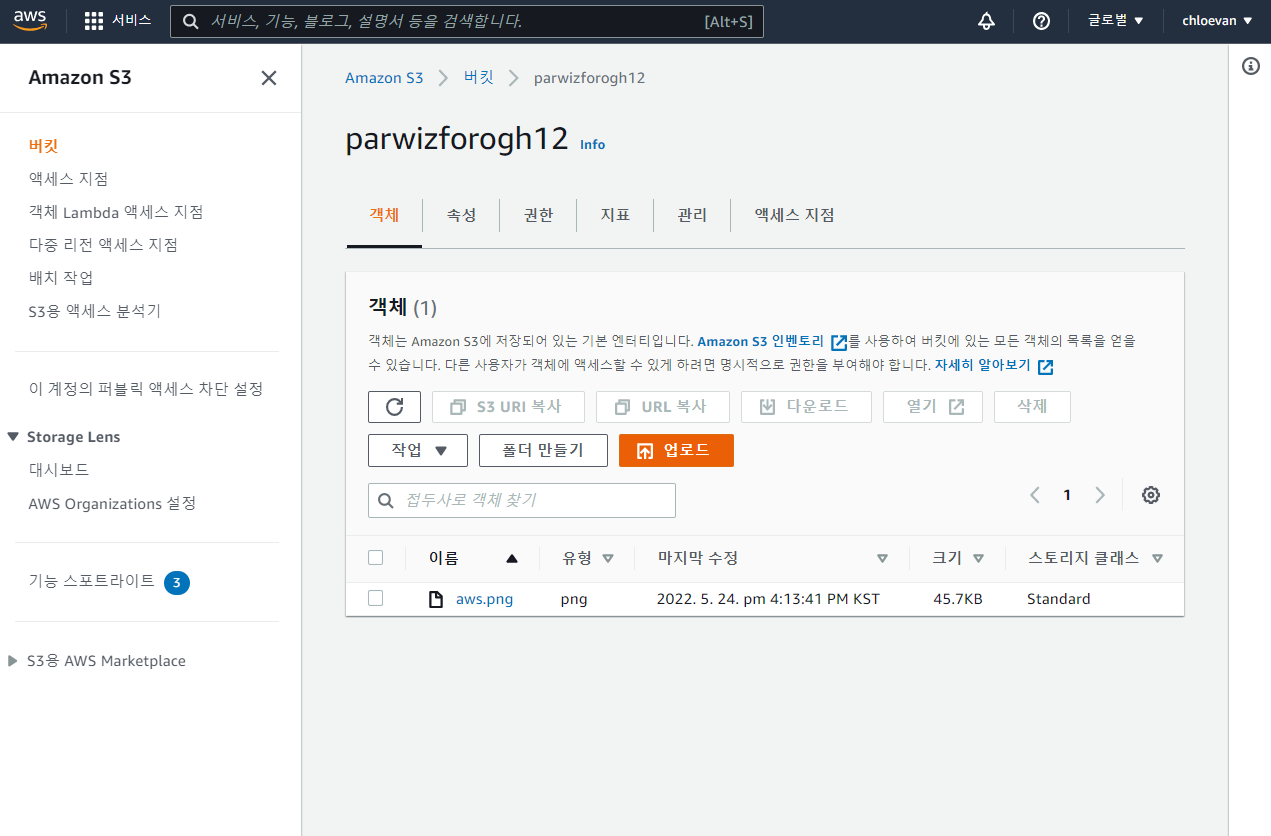

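Uploading and Viewing an Image

- Code for uploading an image generally looks like the following.

- Note that the code is written differently when uploading through Django, Flask, and similar frameworks, so treat this as a reference only.

- After running the code, check the result directly in the web UI.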

client = boto3.client('s3')

# Choose your own image path.
with open('your/folder/aws.png', 'rb') as f:
    data = f.read()

response = client.put_object(
    ACL="public-read-write",  # grants public access; use "private" unless that is intended
    Bucket="your_bucket_name",
    Body=data,
    Key='aws.png'
)

print(response)

{'ResponseMetadata': {'RequestId': 'W2G68SP92N9QX2KW', 'HostId': 'CHigC5S5mAPPHgNQLDnIoT/TkokxD1aXpd0ho45qAEFYWlxvsDGi7OrfnW4hmpby1fZP7wUWCBw=', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amz-id-2': 'CHigC5S5mAPPHgNQLDnIoT/TkokxD1aXpd0ho45qAEFYWlxvsDGi7OrfnW4hmpby1fZP7wUWCBw=', 'x-amz-request-id': 'W2G68SP92N9QX2KW', 'date': 'Tue, 24 May 2022 07:13:41 GMT', 'etag': '"b4597ef3041410d11360b8d7e66616b8"', 'server': 'AmazonS3', 'content-length': '0'}, 'RetryAttempts': 0}, 'ETag': '"b4597ef3041410d11360b8d7e66616b8"'}
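When an uploaded image should open in the browser rather than download as a generic binary, it usually helps to set the object's `ContentType`, which `put_object` accepts as a parameter. A minimal sketch, assuming the type can be guessed from the file extension; `build_put_object_kwargs` is a hypothetical helper name, and the body bytes below are a placeholder.

```python
import mimetypes


def build_put_object_kwargs(bucket, key, data, acl="private"):
    """Assemble put_object arguments, guessing ContentType from the key."""
    content_type, _ = mimetypes.guess_type(key)
    kwargs = {"Bucket": bucket, "Key": key, "Body": data, "ACL": acl}
    if content_type is not None:
        # Only set ContentType when the extension is recognized.
        kwargs["ContentType"] = content_type
    return kwargs


kwargs = build_put_object_kwargs("your_bucket_name", "aws.png", b"placeholder image bytes")
print(kwargs["ContentType"])
# With credentials configured: boto3.client("s3").put_object(**kwargs)
```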

- Next, download the file.

import boto3
import botocore

BUCKET_NAME = 'your_bucket_name'  # bucket name
FILE_NAME = 'aws.png'  # object key in S3
LOCAL_FILENAME = 'my_local_image.png'  # local file name

def download_file(bucketName, s3_fileName, local_fileName):
    s3 = boto3.client('s3')
    try:
        # Use the local_fileName parameter rather than the LOCAL_FILENAME global.
        s3.download_file(bucketName, s3_fileName, local_fileName)
    except botocore.exceptions.ClientError as e:
        if e.response['Error']['Code'] == "404":
            print("The object does not exist.")
        else:
            raise

download_file(BUCKET_NAME, FILE_NAME, LOCAL_FILENAME)
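The error code to check depends on the operation: `download_file` typically surfaces a missing object as `"404"`, while `get_object` reports `"NoSuchKey"`. A small sketch of a check that accepts either; `is_not_found` is a hypothetical helper that takes the `response` dictionary carried by a `ClientError`.

```python
def is_not_found(error_response):
    """Return True if a ClientError response dict describes a missing object."""
    code = error_response.get("Error", {}).get("Code", "")
    return code in {"404", "NoSuchKey", "NotFound"}


# Response dicts shaped like e.response on botocore.exceptions.ClientError.
print(is_not_found({"Error": {"Code": "404", "Message": "Not Found"}}))
print(is_not_found({"Error": {"Code": "AccessDenied", "Message": "Access Denied"}}))
```

Inside an `except botocore.exceptions.ClientError as e:` block, the condition would then read `if is_not_found(e.response):`.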

- Next, download the image and display it directly.

import matplotlib.pyplot as plt
import matplotlib.image as img
import tempfile

def display(bucketName, s3_fileName):
    s3 = boto3.resource('s3')
    try:
        img_object = s3.Object(bucketName, s3_fileName)
        # Download the object into a temporary file, then read it as an image.
        tmp = tempfile.NamedTemporaryFile()
        with open(tmp.name, 'wb') as f:
            img_object.download_fileobj(f)
        img_file = img.imread(tmp.name)
        plt.imshow(img_file)
        plt.show()
    # Print a message when the object does not exist.
    except botocore.exceptions.ClientError as e:
        if e.response['Error']['Code'] == "404":
            print("The object does not exist.")
        else:
            raise

BUCKET_NAME = 'your_bucket_name'  # bucket name
FILE_NAME = 'aws.png'  # object key in S3
display(BUCKET_NAME, FILE_NAME)

FILE_NAME = 'aws2.png'  # an object key that does not exist
display(BUCKET_NAME, FILE_NAME)

The object does not exist.

Listing All Buckets

- First, list the buckets with the client interface.

client = boto3.client('s3')

response = client.list_buckets()

print("Listing all buckets")
for bucket in response['Buckets']:
    print(bucket['Name'])

Listing all buckets

elasticbeanstalk-ap-northeast-2-381282212299

humanbucket-test

your_bucket_name

your_bucket_name

- Next, list the buckets with the resource interface.

resource = boto3.resource('s3')

iterator = resource.buckets.all()

print("Listing all buckets")
for bucket in iterator:
    print(bucket.name)

Listing all buckets

elasticbeanstalk-ap-northeast-2-381282212299

humanbucket-test

your_bucket_name

your_bucket_name
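Each entry in the `list_buckets` response also carries a `CreationDate`, so the listing can be sorted or annotated. A minimal sketch on a hand-written response of the same shape; the bucket names and dates below are made up for illustration.

```python
from datetime import datetime, timezone


def buckets_newest_first(response):
    """Return (name, creation date) pairs sorted from newest to oldest."""
    buckets = sorted(response["Buckets"], key=lambda b: b["CreationDate"], reverse=True)
    return [(b["Name"], b["CreationDate"].date().isoformat()) for b in buckets]


# Made-up sample shaped like the return value of client.list_buckets().
sample = {
    "Buckets": [
        {"Name": "older-bucket", "CreationDate": datetime(2022, 5, 24, tzinfo=timezone.utc)},
        {"Name": "newer-bucket", "CreationDate": datetime(2022, 5, 25, tzinfo=timezone.utc)},
    ]
}

for name, created in buckets_newest_first(sample):
    print(name, created)
```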

Deleting a Bucket

- Now delete the bucket.

client = boto3.client('s3')

bucket_name = "your_bucket_name"

client.delete_bucket(Bucket=bucket_name)

print("S3 Bucket has been deleted")

S3 Bucket has been deleted

- Recreate the deleted bucket.

bucket = boto3.resource('s3')

response = bucket.create_bucket(
    Bucket="your_bucket_name",
    ACL="private",  # public-read
    CreateBucketConfiguration={
        'LocationConstraint': 'ap-northeast-2'
    }
)

print(response)

s3.Bucket(name='your_bucket_name')

- This time, delete the newly created bucket with the resource interface.

resource = boto3.resource('s3')

bucket_name = "your_bucket_name"

s3_bucket = resource.Bucket(bucket_name)

s3_bucket.delete()

print(" This {} bucket has been deleted ".format(s3_bucket))

This s3.Bucket(name='your_bucket_name') bucket has been deleted

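Deleting a Bucket That Already Contains Objects

- A bucket that still contains files may fail to delete.

- So delete each object first, and then delete the bucket.

- The bucket to delete here is the one that holds the image file.

BUCKET_NAME = 'your_bucket_name'  # bucket name
FILE_NAME = 'aws.png'  # object key in S3
display(BUCKET_NAME, FILE_NAME)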

- Trying to delete the bucket the same way as before raises an error.

resource = boto3.resource('s3')

bucket_name = "your_bucket_name"

s3_bucket =resource.Bucket(bucket_name)

s3_bucket.delete()

print(" This {} bucket has been deleted ".format(s3_bucket))

---------------------------------------------------------------------------

ClientError Traceback (most recent call last)

Input In [18], in <cell line: 4>()

2 bucket_name = "your_bucket_name"

3 s3_bucket =resource.Bucket(bucket_name)

----> 4 s3_bucket.delete()

5 print(" This {} bucket has been deleted ".format(s3_bucket))

File /mnt/c/Users/human/Desktop/aws-lectures-wsl2/venv/lib/python3.8/site-packages/boto3/resources/factory.py:580, in ResourceFactory._create_action.<locals>.do_action(self, *args, **kwargs)

579 def do_action(self, *args, **kwargs):

--> 580 response = action(self, *args, **kwargs)

582 if hasattr(self, 'load'):

583 # Clear cached data. It will be reloaded the next

584 # time that an attribute is accessed.

585 # TODO: Make this configurable in the future?

586 self.meta.data = None

File /mnt/c/Users/human/Desktop/aws-lectures-wsl2/venv/lib/python3.8/site-packages/boto3/resources/action.py:88, in ServiceAction.__call__(self, parent, *args, **kwargs)

79 params.update(kwargs)

81 logger.debug(

82 'Calling %s:%s with %r',

83 parent.meta.service_name,

84 operation_name,

85 params,

86 )

---> 88 response = getattr(parent.meta.client, operation_name)(*args, **params)

90 logger.debug('Response: %r', response)

92 return self._response_handler(parent, params, response)

File /mnt/c/Users/human/Desktop/aws-lectures-wsl2/venv/lib/python3.8/site-packages/botocore/client.py:508, in ClientCreator._create_api_method.<locals>._api_call(self, *args, **kwargs)

504 raise TypeError(

505 f"{py_operation_name}() only accepts keyword arguments."

506 )

507 # The "self" in this scope is referring to the BaseClient.

--> 508 return self._make_api_call(operation_name, kwargs)

File /mnt/c/Users/human/Desktop/aws-lectures-wsl2/venv/lib/python3.8/site-packages/botocore/client.py:911, in BaseClient._make_api_call(self, operation_name, api_params)

909 error_code = parsed_response.get("Error", {}).get("Code")

910 error_class = self.exceptions.from_code(error_code)

--> 911 raise error_class(parsed_response, operation_name)

912 else:

913 return parsed_response

ClientError: An error occurred (BucketNotEmpty) when calling the DeleteBucket operation: The bucket you tried to delete is not empty

- In this case, first write a helper function that empties the bucket.

BUCKET_NAME = "your_bucket_name"

s3_resource = boto3.resource('s3')
s3_bucket = s3_resource.Bucket(BUCKET_NAME)

def clean_up():
    # Delete every current object.
    for s3_object in s3_bucket.objects.all():
        s3_object.delete()

    # Delete every remaining object version (relevant when versioning is enabled).
    for s3_object_ver in s3_bucket.object_versions.all():
        s3_object_ver.delete()

    print("S3 bucket cleaned")

clean_up()

S3 bucket cleaned
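Deleting objects one at a time issues one request per object. `delete_objects` accepts up to 1,000 keys per call, so for larger buckets the keys can be grouped into batches. The sketch below only builds the request payloads; `build_delete_batches` is a hypothetical helper name, and sending each payload with `client.delete_objects(Bucket=..., Delete=payload)` is left to the caller.

```python
def build_delete_batches(keys, batch_size=1000):
    """Split keys into Delete payloads of at most batch_size objects each."""
    if not 1 <= batch_size <= 1000:
        raise ValueError("batch_size must be between 1 and 1000")
    keys = list(keys)
    batches = []
    for start in range(0, len(keys), batch_size):
        chunk = keys[start:start + batch_size]
        # Quiet=True asks S3 to report only the keys that failed.
        batches.append({"Objects": [{"Key": k} for k in chunk], "Quiet": True})
    return batches


batches = build_delete_batches(["files/a.txt", "files/b.txt", "files/c.txt"], batch_size=2)
print(len(batches))
print(batches[0])
```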

- Now try the deletion again. This time the bucket is deleted successfully.

s3_bucket.delete()

print(" This {} bucket has been deleted ".format(s3_bucket))

This s3.Bucket(name='your_bucket_name') bucket has been deleted

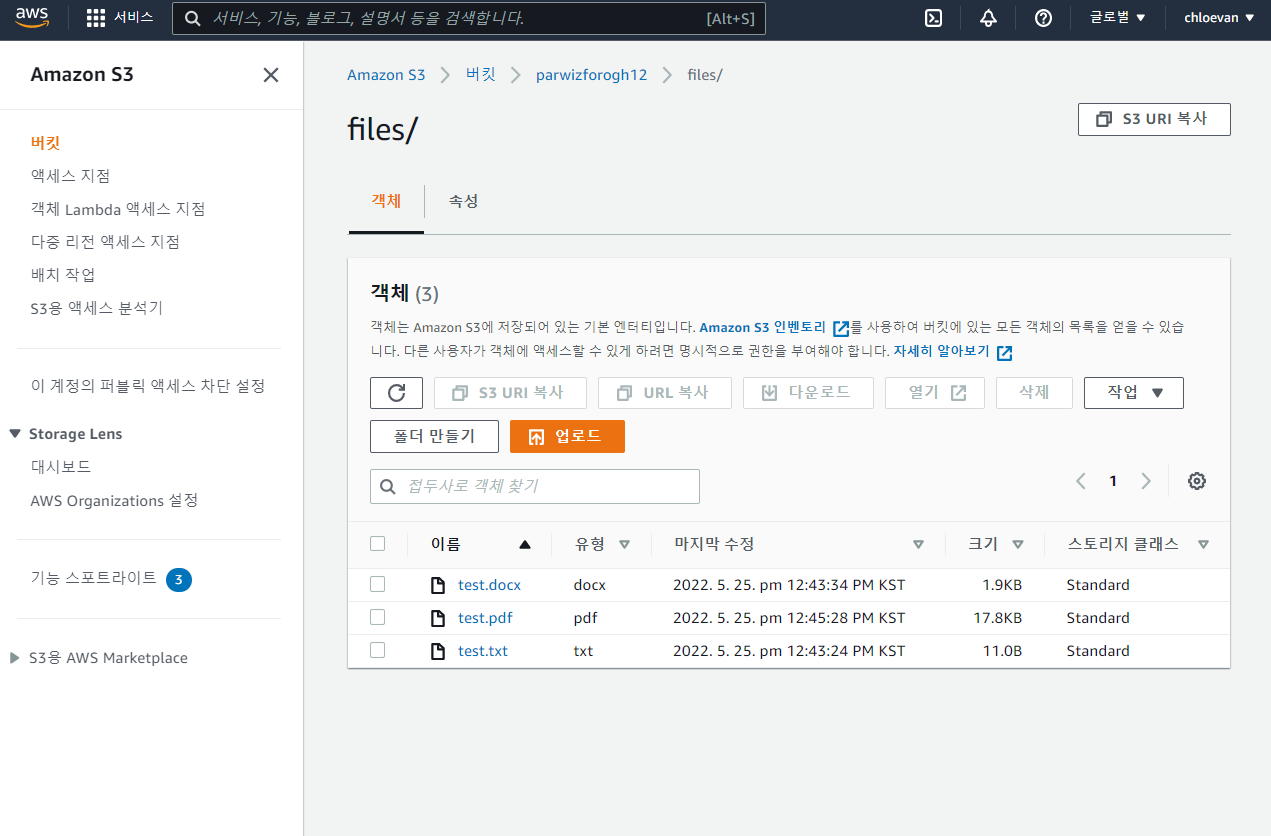

Uploading Files

- Upload a few small files such as pdf and txt.

bucket = boto3.resource('s3')

response = bucket.create_bucket(
    Bucket="your_bucket_name",
    ACL="private",  # public-read
    CreateBucketConfiguration={
        'LocationConstraint': 'ap-northeast-2'
    }
)

print(response)

s3.Bucket(name='your_bucket_name')

BUCKET_NAME = "your_bucket_name"

# Client interface
s3_client = boto3.client('s3')

def upload_files(file_name, bucket, object_name=None, args=None):
    # Default the object key to the local file name.
    if object_name is None:
        object_name = file_name

    s3_client.upload_file(file_name, bucket, object_name, ExtraArgs=args)
    # Report the bucket that was actually passed in, not the global.
    print("{} has been uploaded to {} bucket".format(file_name, bucket))

# Alternative: the same upload through the resource interface
# (kept commented out for reference).
#
# s3_resource = boto3.resource('s3')
#
# def upload_files(file_name, bucket, object_name=None, args=None):
#     if object_name is None:
#         object_name = file_name
#
#     s3_resource.meta.client.upload_file(file_name, bucket, object_name, ExtraArgs=args)
#     print("{} has been uploaded to {} bucket".format(file_name, bucket))
#
# upload_files("myfile.txt", BUCKET_NAME)

import os

os.getcwd()

'/mnt/c/Users/human/Desktop/aws-lectures-wsl2/ch02_S3'

upload_files("files/test.txt", BUCKET_NAME)

files/test.txt has been uploaded to your_bucket_name bucket

upload_files("files/test.docx", BUCKET_NAME)

files/test.docx has been uploaded to your_bucket_name bucket

upload_files("files/test.pdf", BUCKET_NAME)

files/test.pdf has been uploaded to your_bucket_name bucket

- Check that the files were actually stored.

Download Files

- Download one of the stored files.

BUCKET_NAME = "your_bucket_name"

s3_resource = boto3.resource('s3')

s3_object = s3_resource.Object(BUCKET_NAME, 'files/test.pdf')  # object key in the bucket

s3_object.download_file('downloaded.pdf')  # local file name to save as

print("File has been downloaded")

File has been downloaded

Listing Files

- List the files in the bucket.

BUCKET_NAME = "your_bucket_name"

s3_resource = boto3.resource('s3')
s3_bucket = s3_resource.Bucket(BUCKET_NAME)

print("Listing Bucket Files or Objects")
for obj in s3_bucket.objects.all():
    print(obj.key)

Listing Bucket Files or Objects

files/test.docx

files/test.pdf

files/test.txt

- This time, print only the txt files.

import boto3

BUCKET_NAME = "your_bucket_name"

s3_resource = boto3.resource('s3')
s3_bucket = s3_resource.Bucket(BUCKET_NAME)

print("Listing Filtered File")
# filter() without arguments lists every object; the suffix check runs locally.
for obj in s3_bucket.objects.filter():
    if obj.key.endswith('.txt'):
        print(obj.key)

Listing Filtered File

files/test.txt
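Filtering by suffix happens on the client side, after every key has been listed. When the wanted files share a key prefix, `objects.filter(Prefix=...)` lets S3 narrow the listing first, and the suffix check then runs on fewer keys. A sketch of the suffix step on plain strings; `keys_with_suffix` is a hypothetical helper name, and `files/README.TXT` is an invented key added to show the case-insensitive match.

```python
def keys_with_suffix(keys, suffix):
    """Keep keys ending in suffix, compared case-insensitively."""
    suffix = suffix.lower()
    return [k for k in keys if k.lower().endswith(suffix)]


listed = ["files/test.docx", "files/test.pdf", "files/test.txt", "files/README.TXT"]
print(keys_with_suffix(listed, ".txt"))
# With a real bucket, the keys could come from:
#   [obj.key for obj in s3_bucket.objects.filter(Prefix="files/")]
```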

Copying to Another Bucket

- Objects can be copied over to a different bucket.

s3 = boto3.resource('s3')

# Create a new bucket (call create_bucket on the s3 resource defined above).
response = s3.create_bucket(
    Bucket="parwizforogh2222",
    ACL="private",  # public-read
    CreateBucketConfiguration={
        'LocationConstraint': 'ap-northeast-2'
    }
)

print(response)

s3.Bucket(name='parwizforogh2222')

copy_source = {
    'Bucket': 'your_bucket_name',
    'Key': 'files/test.pdf'
}

s3.meta.client.copy(copy_source, 'parwizforogh2222', 'files/test.pdf')

Deleting Objects from a Bucket

- To delete a single file, write the code as follows.

client = boto3.client('s3')

response = client.delete_object(
    Bucket='your_bucket_name',
    Key='files/test.pdf'
)

print(response)

{'ResponseMetadata': {'RequestId': 'R5T506T05NNNTP95', 'HostId': 'eLZXf3j2+XRl7wlgBFZtKEyUAlv6GwYVoxBApRL8n354HSWZi2ELTljHltzUIn91GsR7djBWV5DrAckP0gBf8g==', 'HTTPStatusCode': 204, 'HTTPHeaders': {'x-amz-id-2': 'eLZXf3j2+XRl7wlgBFZtKEyUAlv6GwYVoxBApRL8n354HSWZi2ELTljHltzUIn91GsR7djBWV5DrAckP0gBf8g==', 'x-amz-request-id': 'R5T506T05NNNTP95', 'date': 'Wed, 25 May 2022 05:36:43 GMT', 'server': 'AmazonS3'}, 'RetryAttempts': 0}}

- To delete several files at once, run the following.

response = client.delete_objects(
    Bucket='your_bucket_name',
    Delete={
        'Objects': [
            {'Key': 'files/test.txt'},
            {'Key': 'files/test.docx'}
        ]
    }
)

print(response)

{'ResponseMetadata': {'RequestId': 'YBQ5RKKF8NK96R4B', 'HostId': 'DyDjbVbW4e7qqIAX1ZemE0BEy9koWXIH+X5gQsUBp8lGUdvHclqrGcOlPdJDmB9RNOA7mlTZcsE=', 'HTTPStatusCode': 200, 'HTTPHeaders': {'x-amz-id-2': 'DyDjbVbW4e7qqIAX1ZemE0BEy9koWXIH+X5gQsUBp8lGUdvHclqrGcOlPdJDmB9RNOA7mlTZcsE=', 'x-amz-request-id': 'YBQ5RKKF8NK96R4B', 'date': 'Wed, 25 May 2022 05:38:07 GMT', 'content-type': 'application/xml', 'transfer-encoding': 'chunked', 'server': 'AmazonS3', 'connection': 'close'}, 'RetryAttempts': 0}, 'Deleted': [{'Key': 'files/test.docx'}, {'Key': 'files/test.txt'}]}
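`delete_objects` can succeed for some keys and fail for others within one call; failures are reported in an `Errors` list next to `Deleted`. A minimal sketch for summarizing such a response; `summarize_delete_response` is a hypothetical helper name, and the failing entry in the sample is invented for illustration.

```python
def summarize_delete_response(response):
    """Return (deleted keys, {failed key: error code}) from a delete_objects response."""
    deleted = [item["Key"] for item in response.get("Deleted", [])]
    failed = {item["Key"]: item["Code"] for item in response.get("Errors", [])}
    return deleted, failed


sample = {
    "Deleted": [{"Key": "files/test.docx"}, {"Key": "files/test.txt"}],
    # Invented failure entry, shaped like the Errors list in the response.
    "Errors": [{"Key": "files/locked.pdf", "Code": "AccessDenied", "Message": "Access Denied"}],
}

deleted, failed = summarize_delete_response(sample)
print(deleted)
print(failed)
```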